Nora Belrose on X: "the @huggingface implementation of swin transformer v2 outputs NaN at initialization when you change the image size or number of channels from the default https://t.co/AXMahI2ptl" / X

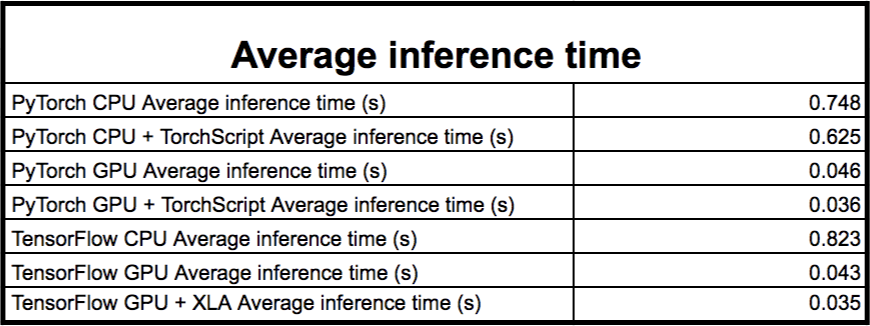

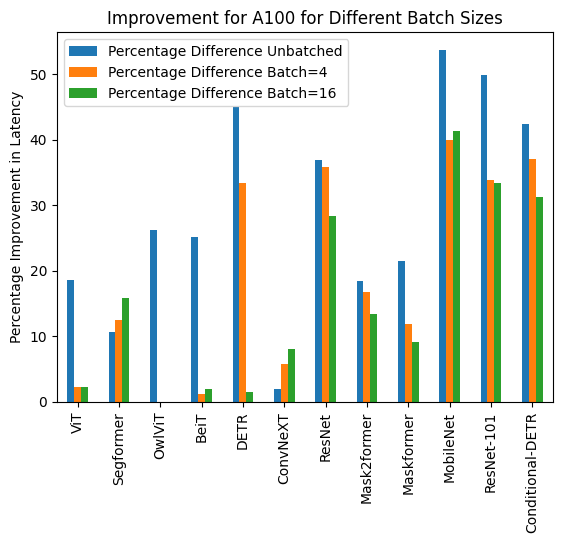

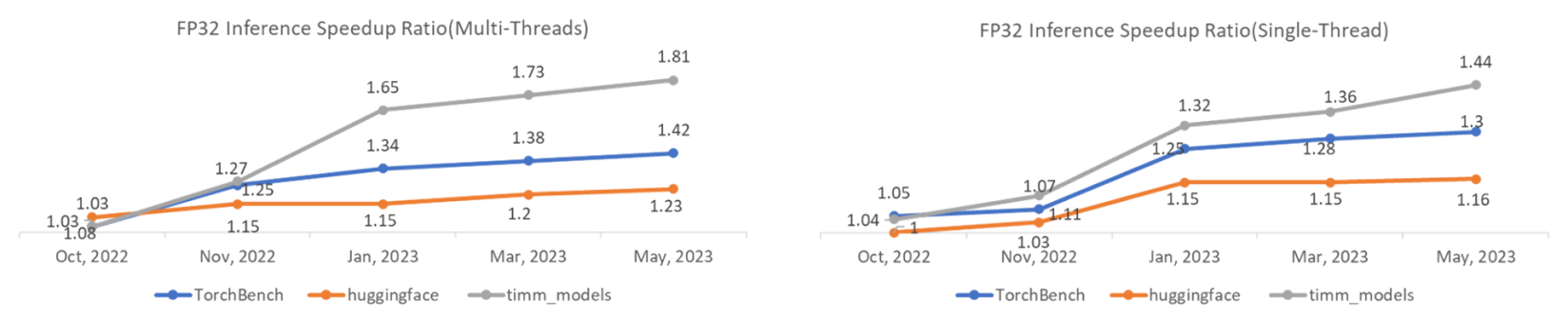

TorchServe: Increasing inference speed while improving efficiency - deployment - PyTorch Dev Discussions

TorchServe: Increasing inference speed while improving efficiency - deployment - PyTorch Dev Discussions

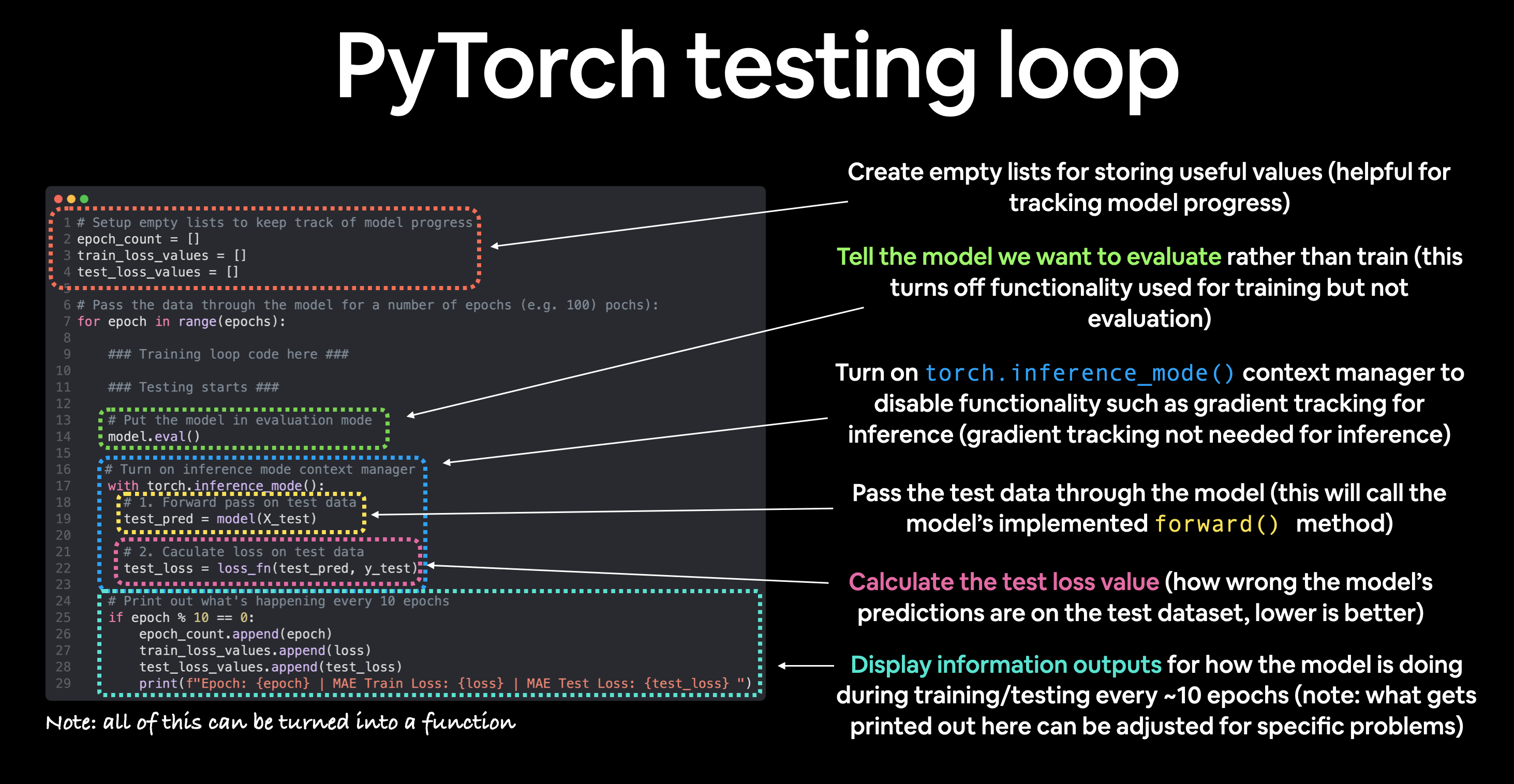

01_PyTorch Workflow - 42. timestamp 5:35:00 - Problem with plot_prediction · mrdbourke pytorch-deep-learning · Discussion #341 · GitHub

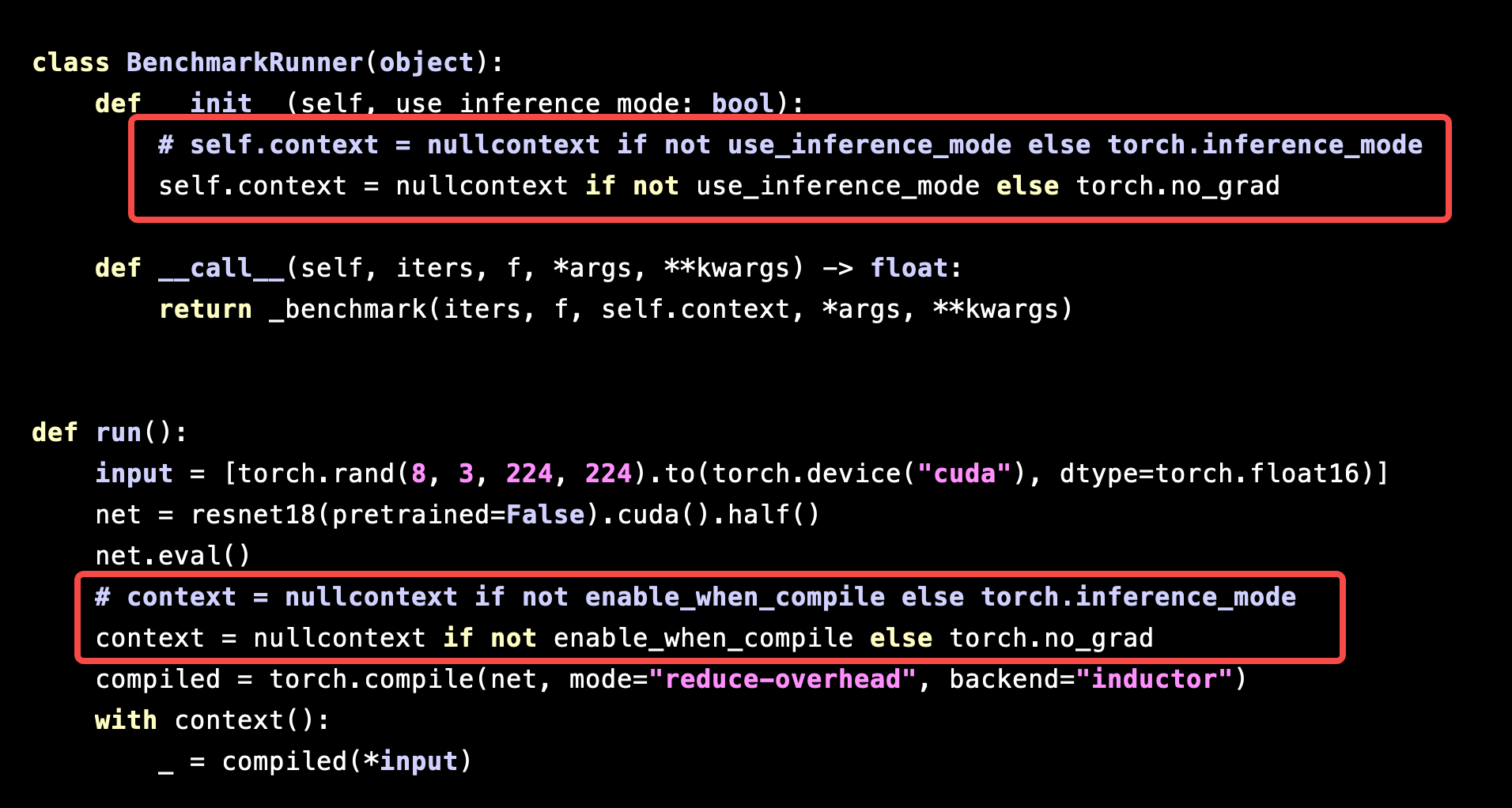

Performance of `torch.compile` is significantly slowed down under `torch.inference_mode` - torch.compile - PyTorch Forums

torch.inference_mode and tensor subclass: RuntimeError: Cannot set version_counter for inference tensor · Issue #112024 · pytorch/pytorch · GitHub

Inference mode complains about inplace at torch.mean call, but I don't use inplace · Issue #70177 · pytorch/pytorch · GitHub